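On December 12, 2019, Pivotal released gp6.2.1. Our company's GP cluster was due for expansion and an upgrade, and we had to decide on a version, so we installed gp6 to compare it against gp5. This document follows the official documentation and completes the installation step by step according to the official standard procedure. It also lists the differences between installing gp6 and installing older versions.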

1. Hardware/software overview and required dependencies

1.1 Hardware and software

- OS version: Red Hat 6.8

- Hardware: 3 virtual machines, each with 2 cores, 16 GB RAM and a 50 GB disk

- Test layout: one master, 4 primary segments, 4 mirrors, no standby

| Host IP | Hostname | Role |
| --- | --- | --- |
| 172.28.25.201 | mdw | master |
| 172.28.25.202 | sdw1 | seg1, seg2, mirror3, mirror4 |
| 172.28.25.203 | sdw2 | seg3, seg4, mirror1, mirror2 |

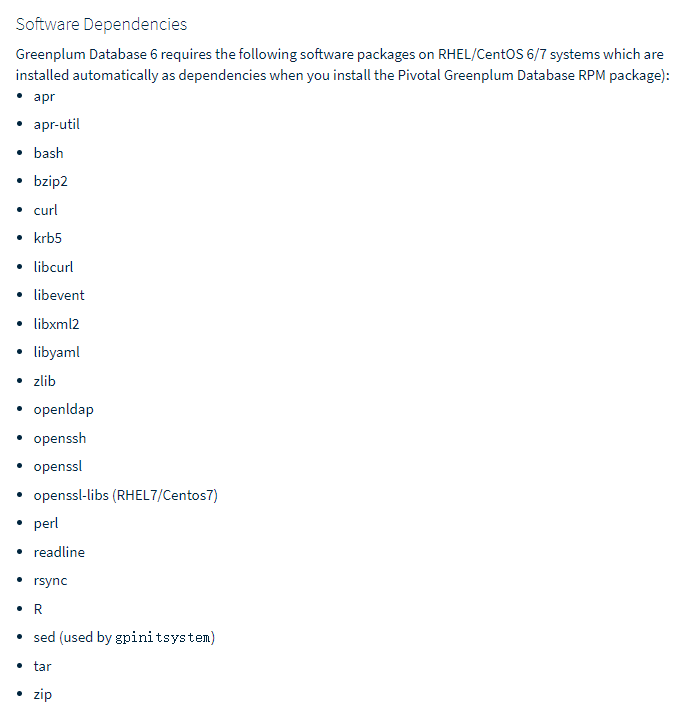

1.2 Install the required dependencies

Differences from older versions

gp4.x has no dependency-check step.

gp5.x: installing with rpm requires checking the dependencies.

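gp6.2: installing with rpm requires checking the dependencies; installing with yum install pulls them in automatically, provided the machine has Internet access.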

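For the GP6.x RPM, check the software dependencies before installing. The installation needs network access; on an intranet machine, download the required packages in advance.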

1.2.1 Install the dependency packages in one batch (requires Internet access)

Greenplum 5 was installed with the rpm command, whereas for Greenplum 6 the dependencies are installed directly with yum install:

sudo yum install -y apr apr-util bash bzip2 curl krb5 libcurl libevent libxml2 libyaml zlib openldap openssh openssl openssl-libs perl readline rsync R sed tar zip krb5-devel

1.2.2 Intranet machines: download the packages manually, then upload them to the server

Note: match the OS release and architecture. In this test, for example, the VMs are el6.x86_64:

[root@mdw ~]# uname -a

Linux mdw 2.6.32-642.el6.x86_64 #1 SMP Wed Apr 13 00:51:26 EDT 2016 x86_64 x86_64 x86_64 GNU/Linux

Download site: http://rpmfind.net/linux/rpm2html/search.php

1.2.3 Downloading packages for offline use on Linux

Prerequisites:

1. The same OS release as the hosts of the GP cluster

2. Internet access

yumdownloader --destdir ./ --resolve libyaml

2. Configure system parameters

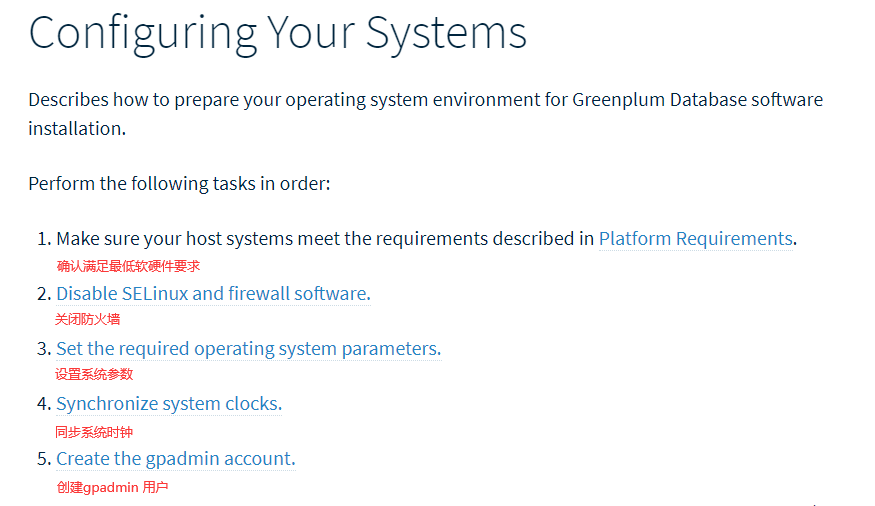

## Differences from older versions

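gp6 has no gpcheck tool, but gpinitsystem checks the system parameters. Not applying the officially recommended parameters does not block the installation, but it does affect cluster performance.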

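- System parameters must be changed as root, and a reboot is required afterwards; you can make all the changes first and reboot once at the end.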

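- It is advisable to change the master host's parameters first; once GP is installed on the master and passwordless SSH is set up, use gpscp and gpssh to change the other nodes in batch.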

- Reference: https://gpdb.docs.pivotal.io/6-2/install_guide/prep_os.html

2.1 Disable the firewall

2.1.1 Check SELinux (Security-Enhanced Linux)

Check as root:

[root@mdw ~]# sestatus

SELinux status: disabled

If SELinux status is not disabled, set the following in /etc/selinux/config and then reboot (you can reboot once after all parameters have been adjusted):

SELINUX=disabled

2.1.2 Check the iptables status

[root@mdw ~]# /sbin/chkconfig --list iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

If it is not off, disable it and then reboot (you can reboot once after all parameters have been adjusted):

/sbin/chkconfig iptables off

2.1.3 Check firewalld (usually not present on CentOS 6)

[root@mdw ~]# systemctl status firewalld

If firewalld is disabled, the output is:

* firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

If it is not disabled, disable it and then reboot (you can reboot once after all parameters have been adjusted):

[root@mdw ~]# systemctl stop firewalld.service

[root@mdw ~]# systemctl disable firewalld.service

2.2 Configure host names

2.2.1 Set the hostname of each machine

Set the master's hostname to mdw; setting the hostnames of the segment hosts is optional.

Change the hostname of each host.

The commonly recommended naming convention is:

Master: mdw

Standby Master: smdw

Segment Host: sdw1, sdw2 … sdwn

To change it:

# Temporary change

hostname mdw

# Permanent change

vi /etc/sysconfig/network    # set HOSTNAME=mdw

2.2.2 Configure /etc/hosts

# Add the IP address and alias of every machine

[root@mdw ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.28.25.201 mdw

172.28.25.202 sdw1

172.28.25.203 sdw2
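Appending with `cat >> /etc/hosts << EOF`, as in the next step, adds the entries again every time it runs, so repeating it leaves duplicates. A minimal sketch of an idempotent alternative, assuming bash; `add_host_entry` is a hypothetical helper, not a Greenplum utility, and it takes the target file as an argument so it can be tried on a copy before touching /etc/hosts.

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears as a host name in it.
# Hypothetical helper for illustration; run as root when FILE is /etc/hosts.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Look for NAME in columns 2..NF of non-comment lines; awk exits 0 when found.
    if [ -f "$file" ] && awk -v n="$name" '
        !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example for this cluster (commented out so that sourcing the block changes nothing):
# add_host_entry /etc/hosts 172.28.25.201 mdw
# add_host_entry /etc/hosts 172.28.25.202 sdw1
# add_host_entry /etc/hosts 172.28.25.203 sdw2
```

Because the check looks only at host names, an existing entry that maps the name to a different IP is left alone and has to be fixed by hand.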

# Update the hosts file on every host in the cluster: log in to each host and run:

cat >> /etc/hosts << EOF

172.28.25.201 mdw

172.28.25.202 sdw1

172.28.25.203 sdw2

EOF

2.3 Configure sysctl.conf

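Adjust the system parameters to the actual machine (before GP 5.0 the official docs gave fixed default values; since 5.0 they give formulas for some of them).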

Officially recommended settings; after editing, reload them with sysctl -p:

# kernel.shmall = _PHYS_PAGES / 2 # See Shared Memory Pages # shared memory

kernel.shmall = 4000000000

# kernel.shmmax = kernel.shmall * PAGE_SIZE # shared memory

kernel.shmmax = 500000000

kernel.shmmni = 4096

vm.overcommit_memory = 2 # See Segment Host Memory # host memory

vm.overcommit_ratio = 95 # See Segment Host Memory # host memory

net.ipv4.ip_local_port_range = 10000 65535 # See Port Settings # port settings

kernel.sem = 500 2048000 200 40960

kernel.sysrq = 1

kernel.core_uses_pid = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.msgmni = 2048

net.ipv4.tcp_syncookies = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_max_syn_backlog = 4096

net.ipv4.conf.all.arp_filter = 1

net.core.netdev_max_backlog = 10000

net.core.rmem_max = 2097152

net.core.wmem_max = 2097152

vm.swappiness = 10

vm.zone_reclaim_mode = 0

vm.dirty_expire_centisecs = 500

vm.dirty_writeback_centisecs = 100

vm.dirty_background_ratio = 0 # See System Memory # system memory

vm.dirty_ratio = 0

vm.dirty_background_bytes = 1610612736

vm.dirty_bytes = 4294967296

# Shared memory

# kernel.shmall = _PHYS_PAGES / 2

# kernel.shmmax = kernel.shmall * PAGE_SIZE
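The two formulas above can be evaluated in one step. A small sketch, assuming bash (whose arithmetic is 64-bit, so the product does not overflow); `shm_values` is a hypothetical helper that takes `_PHYS_PAGES` and `PAGE_SIZE` as arguments, so the arithmetic can be checked without reading the live system.

```bash
#!/usr/bin/env bash
# shm_values PHYS_PAGES PAGE_SIZE
# Prints the two sysctl lines derived from
#   kernel.shmall = _PHYS_PAGES / 2
#   kernel.shmmax = kernel.shmall * PAGE_SIZE
shm_values() {
    local phys_pages=$1 page_size=$2
    local shmall=$(( phys_pages / 2 ))
    local shmmax=$(( shmall * page_size ))
    echo "kernel.shmall = $shmall"
    echo "kernel.shmmax = $shmmax"
}

# On a live host:
# shm_values "$(getconf _PHYS_PAGES)" "$(getconf PAGE_SIZE)"
```

Passing 4083548 pages of 4096 bytes reproduces the 2041774 and 8363106304 that getconf yields on the 16 GB test VM.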

[root@mdw ~]# echo $(expr $(getconf _PHYS_PAGES) / 2)

2041774

[root@mdw ~]# echo $(expr $(getconf _PHYS_PAGES) / 2 \* $(getconf PAGE_SIZE))

8363106304

# Host memory

vm.overcommit_memory: the OS uses this parameter to decide how much memory can be allocated to processes. For Greenplum Database it should be set to 2.

vm.overcommit_ratio: the percentage of RAM that can be allocated to processes; the rest is left to the OS. The default on Red Hat is 50; 95 is recommended.
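The official sizing formula, vm.overcommit_ratio = (RAM - 0.026 * gp_vmem) / RAM, yields a fraction, whereas the sysctl expects a whole percentage. A hedged sketch of the conversion; `overcommit_ratio` is a hypothetical helper, both sizes are in GB, and gp_vmem (the memory Greenplum may use on the host) has to be supplied by you, because this document does not show how to derive it.

```bash
#!/usr/bin/env bash
# overcommit_ratio RAM_GB GP_VMEM_GB
# Evaluates (RAM - 0.026 * gp_vmem) / RAM and prints it as a whole percentage,
# truncated toward zero by awk's %d, for use as vm.overcommit_ratio.
overcommit_ratio() {
    awk -v ram="$1" -v vmem="$2" 'BEGIN {
        if (ram <= 0) { print "RAM must be positive" > "/dev/stderr"; exit 1 }
        printf "%d\n", (ram - 0.026 * vmem) / ram * 100
    }'
}
```

For example, `overcommit_ratio 16 10` prints 98. Truncating rather than rounding errs toward leaving more memory to the OS.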

# Calculating vm.overcommit_ratio

vm.overcommit_ratio = (RAM - 0.026 * gp_vmem) / RAM

# Port settings

To avoid port conflicts between Greenplum and other applications during initialization, take note of the port range specified by net.ipv4.ip_local_port_range.

When initializing Greenplum with gpinitsystem, do not specify Greenplum Database ports inside that range.

For example, if net.ipv4.ip_local_port_range = 10000 65535, you can set the Greenplum Database base port numbers to these values, which lie below the range:

PORT_BASE = 6000

MIRROR_PORT_BASE = 7000
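To confirm that the chosen base ports stay clear of the ephemeral range, each port block can be compared with net.ipv4.ip_local_port_range. A sketch under the assumption that each host uses ports BASE through BASE+N-1, with N the number of primaries (or mirrors) per host; this matches the 6000/6001 and 7000/7001 ports in the segment list printed by gpdeletesystem at the end of this document. `check_port_block` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# check_port_block BASE COUNT RANGE_LOW RANGE_HIGH
# Succeeds if ports BASE .. BASE+COUNT-1 lie entirely outside RANGE_LOW .. RANGE_HIGH.
check_port_block() {
    local base=$1 count=$2 low=$3 high=$4
    local last=$(( base + count - 1 ))
    if (( last < low || base > high )); then
        return 0
    fi
    echo "ports $base-$last overlap ip_local_port_range $low-$high" >&2
    return 1
}

# On a live host, read the range from the kernel and check both port blocks:
# read -r low high < /proc/sys/net/ipv4/ip_local_port_range
# check_port_block 6000 2 "$low" "$high" && check_port_block 7000 2 "$low" "$high"
```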

# System memory

For systems with more than 64 GB of memory, the following settings are recommended:

vm.dirty_background_ratio = 0

vm.dirty_ratio = 0

vm.dirty_background_bytes = 1610612736 # 1.5GB

vm.dirty_bytes = 4294967296 # 4GB

For systems with 64 GB of memory or less, remove the vm.dirty_background_bytes and vm.dirty_bytes settings and set the following parameters instead:

vm.dirty_background_ratio = 3

vm.dirty_ratio = 10
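The 64 GB rule can be expressed as a small generator. `dirty_settings` is a hypothetical helper that takes the memory size in GB and prints the matching sysctl lines; the byte values are the ones quoted in this section.

```bash
#!/usr/bin/env bash
# dirty_settings MEM_GB
# Prints the vm.dirty_* lines recommended above for the given amount of RAM.
dirty_settings() {
    local mem_gb=$1
    if (( mem_gb > 64 )); then
        echo "vm.dirty_background_ratio = 0"
        echo "vm.dirty_ratio = 0"
        echo "vm.dirty_background_bytes = 1610612736"   # 1.5 GB
        echo "vm.dirty_bytes = 4294967296"              # 4 GB
    else
        # 64 GB or less: ratios only, without the *_bytes parameters
        echo "vm.dirty_background_ratio = 3"
        echo "vm.dirty_ratio = 10"
    fi
}

# Memory in GB on a live host (MemTotal is reported in kB):
# dirty_settings "$(awk '/MemTotal/ {printf "%d", $2 / 1048576}' /proc/meminfo)"
```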

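Increase vm.min_free_kbytes to make sure PF_MEMALLOC requests from network and storage drivers can be satisfied. This is especially important on systems with large amounts of memory, where the default value is usually too low. You can compute vm.min_free_kbytes with the following awk command; the recommended value is usually 3% of physical memory: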

awk 'BEGIN {OFMT = "%.0f";} /MemTotal/ {print "vm.min_free_kbytes =", $2 * .03;}' /proc/meminfo >> /etc/sysctl.conf

Do not set vm.min_free_kbytes above 5% of system memory; doing so can cause out-of-memory conditions.
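The one-liner above appends 3% of MemTotal straight to /etc/sysctl.conf. A slightly more defensive sketch computes the line first, so it can be reviewed, and refuses percentages above the 5% ceiling; `min_free_kbytes_line` is a hypothetical name, and the percentage is a parameter that defaults to 3.

```bash
#!/usr/bin/env bash
# min_free_kbytes_line MEMTOTAL_KB [PERCENT]
# Prints "vm.min_free_kbytes = N" with N = MEMTOTAL_KB * PERCENT / 100, rounded,
# and fails if PERCENT exceeds the 5% ceiling.
min_free_kbytes_line() {
    local mem_kb=$1 pct=${2:-3}
    awk -v m="$mem_kb" -v p="$pct" 'BEGIN {
        if (p > 5) { print "refusing: more than 5% of RAM" > "/dev/stderr"; exit 1 }
        printf "vm.min_free_kbytes = %.0f\n", m * p / 100
    }'
}

# Review the line before appending it:
# min_free_kbytes_line "$(awk '/MemTotal/ {print $2}' /proc/meminfo)"
```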

This test uses Red Hat 6.8 with 16 GB of memory; the configuration is:

[root@mdw ~]# vi /etc/sysctl.conf

[root@mdw ~]# sysctl -p

kernel.shmall = 2041774

kernel.shmmax = 8363106304

kernel.shmmni = 4096

vm.overcommit_memory = 2

vm.overcommit_ratio = 95

net.ipv4.ip_local_port_range = 10000 65535

kernel.sem = 500 2048000 200 40960

kernel.sysrq = 1

kernel.core_uses_pid = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.msgmni = 2048

net.ipv4.tcp_syncookies = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_max_syn_backlog = 4096

net.ipv4.conf.all.arp_filter = 1

net.core.netdev_max_backlog = 10000

net.core.rmem_max = 2097152

net.core.wmem_max = 2097152

vm.swappiness = 10

vm.zone_reclaim_mode = 0

vm.dirty_expire_centisecs = 500

vm.dirty_writeback_centisecs = 100

vm.dirty_background_ratio = 3

vm.dirty_ratio = 10

2.4 System resource limits

Edit /etc/security/limits.conf and add the following parameters:

* soft nofile 524288

* hard nofile 524288

* soft nproc 131072

* hard nproc 131072

- "*" means all users

- nproc is the maximum number of processes

- nofile is the maximum number of open files

- RHEL / CentOS 6: set nproc to 131072 in /etc/security/limits.d/90-nproc.conf

- RHEL / CentOS 7: set nproc to 131072 in /etc/security/limits.d/20-nproc.conf

[root@mdw ~]# cat /etc/security/limits.d/90-nproc.conf

# Default limit for number of user's processes to prevent

# accidental fork bombs.

# See rhbz #432903 for reasoning.

* soft nproc 131072

root soft nproc unlimited

The Linux module pam_limits sets user limits by reading the limits.conf file.

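The new limits take effect at the next login (or after a reboot). ulimit -u shows the maximum number of processes available per user (max user processes); verify that it returns 131072.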
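Besides `ulimit -u`, the configured values can be read back from the files. pam_limits reads limits.conf and then the files in limits.d, and for the same `*` domain a later entry overrides an earlier one, which is why 90-nproc.conf has to be edited as well (RHEL/CentOS 6 typically ships it with `* soft nproc 1024`). A minimal sketch, assuming the `* type item value` layout used above; `effective_limit` is a hypothetical helper that only looks at wildcard lines and ignores per-user and `@group` entries.

```bash
#!/usr/bin/env bash
# effective_limit TYPE ITEM FILE...
# Prints the value of the last "* TYPE ITEM VALUE" line across FILE..., read in the given order.
# Only wildcard (*) entries are considered; per-user and @group lines are ignored.
effective_limit() {
    local type=$1 item=$2
    shift 2
    cat -- "$@" 2>/dev/null | awk -v t="$type" -v i="$item" '
        $1 == "*" && $2 == t && $3 == i { v = $4 }
        END { if (v == "") exit 1; print v }'
}

# Pass limits.conf first and the limits.d file last, mirroring the order pam_limits reads them:
# effective_limit soft nproc /etc/security/limits.conf /etc/security/limits.d/90-nproc.conf
```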

2.5 XFS mount options

Compared with ext4, XFS has the following advantages:

- XFS scales markedly better than ext4; ext4 performance drops noticeably once a single directory holds more than 2 million files

- As a traditional file system ext4 is very stable, but as storage requirements keep growing it is becoming less suitable

- For historical reasons ext4's inode count is limited (32-bit), so it supports at most about 4 billion files, and a single file can be at most 16 TB

- XFS manages space with 64-bit addressing, so a file system can reach the EB scale, and it manages metadata with B+ trees

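GP recommends the XFS file system. RHEL/CentOS 7 and Oracle Linux use XFS as the default file system, and SUSE/openSUSE have supported XFS for a long time. Because this VM has only one disk, which is the system disk, its file system cannot be changed, so mounting an XFS volume is skipped here.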

## Differences from older versions

gp6 has no gpcheck tool, so leaving the file system unchanged does not block the cluster installation.

In versions before gp6, if gpcheck's file system check fails, you can comment out the part of the gpcheck script that checks the file system.

Example steps for mounting a new XFS file system:

1. Partition and format:

mkfs.xfs /dev/sda3

mkdir -p /data/master

2. Add the following line to /etc/fstab:

/dev/sda3 /data xfs rw,noatime,inode64,allocsize=16m 1 1

More on XFS:

https://blog.csdn.net/marxyong/article/details/88703416

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/storage_administration_guide/ch-xfs

2.6 Disk I/O settings

Set the disk read-ahead to 16384. Disk device names differ between systems; use lsblk to see how the disks are mounted:

[root@mdw ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 200M 0 part /boot

└─sda2 8:2 0 49.8G 0 part

├─VolGroup-lv_swap (dm-0) 253:0 0 4G 0 lvm [SWAP]

└─VolGroup-lv_root (dm-1) 253:1 0 45.8G 0 lvm /

sr0 11:0 1 1024M 0 rom

# Takes effect immediately (until the next reboot)

[root@mdw ~]# /sbin/blockdev --setra 16384 /dev/sda

[root@mdw ~]# /sbin/blockdev --getra /dev/sda

16384

# To make it permanent, append the commands above to /etc/rc.d/rc.local

2.7 Make rc.local executable

The rc.local file must be executable at boot. For example, on RHEL / CentOS 7 systems, set the file's execute permission.
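A hedged sketch that makes the read-ahead setting from 2.6 persistent: it appends the `blockdev` command to rc.local only if the line is not already there, then sets the execute bit. `persist_readahead` is a hypothetical helper; the file and device are parameters, and /dev/sda is simply this test VM's disk.

```bash
#!/usr/bin/env bash
# persist_readahead RC_FILE DEVICE [SECTORS]
# Appends "/sbin/blockdev --setra SECTORS DEVICE" to RC_FILE once and makes RC_FILE executable.
persist_readahead() {
    local rc=$1 dev=$2 sectors=${3:-16384}
    local line="/sbin/blockdev --setra $sectors $dev"
    touch "$rc"
    # Append only if the exact line is not present yet
    grep -qxF -- "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
    chmod +x "$rc"
}

# As root on each host:
# persist_readahead /etc/rc.d/rc.local /dev/sda
```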

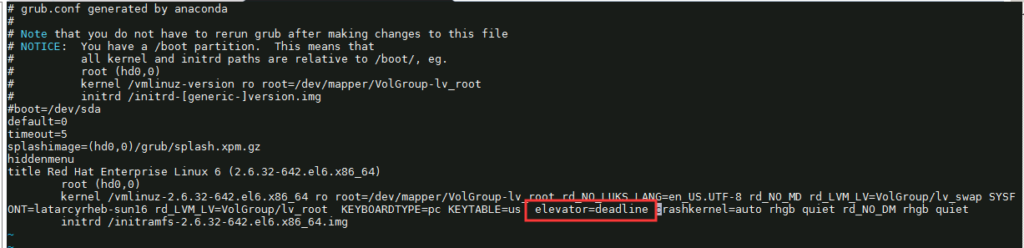

chmod +x /etc/rc.d/rc.local

2.8 Disk I/O scheduler

[root@mdw ~]# more /sys/block/sda/queue/scheduler

noop anticipatory deadline [cfq]

[root@mdw ~]# echo deadline > /sys/block/sda/queue/scheduler

This setting does not persist; it has to be applied again after every reboot.
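In the scheduler file the bracketed entry is the active scheduler (`[cfq]` above). A minimal sketch that extracts it, handy after a reboot to confirm that `elevator=deadline` took effect; `active_choice` is a hypothetical helper that reads the file content on stdin. The transparent_hugepage `enabled` file in 2.9 uses the same bracket format, so the same helper applies there.

```bash
#!/usr/bin/env bash
# active_choice: reads a sysfs "choice" line such as "noop anticipatory deadline [cfq]"
# from stdin and prints the bracketed (active) entry.
active_choice() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# active_choice < /sys/block/sda/queue/scheduler
# cat /sys/kernel/mm/*transparent_hugepage/enabled | active_choice
```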

On RHEL 6.x or CentOS 6.x, edit /boot/grub/grub.conf and append elevator=deadline to the kernel line (the kernel line example in 2.9 includes it).

On RHEL 7.x or CentOS 7.x, which use grub2, use the system tool grubby:

grubby --update-kernel=ALL --args="elevator=deadline"

# After rebooting, check with the following command

grubby --info=ALL

2.9 Transparent Huge Pages (THP)

Disable THP, because it degrades Greenplum Database performance. RHEL 6.x / CentOS 6.x and later enable THP by default.

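One way to disable THP on RHEL 6.x is to add the parameter transparent_hugepage=never to the kernel line in /boot/grub/grub.conf (the whole kernel line must stay on one line):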

kernel /vmlinuz-2.6.18-274.3.1.el5 ro root=LABEL=/ elevator=deadline crashkernel=128M@16M quiet console=tty1 console=ttyS1,115200 panic=30 transparent_hugepage=never

initrd /initrd-2.6.18-274.3.1.el5.img

On RHEL 7.x or CentOS 7.x, which use grub2, use the system tool grubby instead:

grubby --update-kernel=ALL --args="transparent_hugepage=never"

# After adding the parameter, reboot the system.

# Check the parameter:

cat /sys/kernel/mm/*transparent_hugepage/enabled

always [never]

2.10 IPC Object Removal

Disable IPC object removal for RHEL 7.2 or CentOS 7.2, or Ubuntu. The default systemd setting RemoveIPC=yes removes IPC connections when non-system user accounts log out. This causes the Greenplum Database utility gpinitsystem to fail with semaphore errors. Perform one of the following to avoid this issue.

- When you add the gpadmin operating system user account to the master node in Creating the Greenplum Administrative User, create the user as a system account.

- Disable RemoveIPC. Set this parameter in /etc/systemd/logind.conf on the Greenplum Database host systems.

RemoveIPC=no

The setting takes effect after restarting the systemd-logind service or rebooting the system. To restart the service, run this command as the root user.

service systemd-logind restart
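A sketch that applies the RemoveIPC setting without hand-editing: it rewrites an existing RemoveIPC line (commented out or not) to `RemoveIPC=no`, or appends one if none exists. `set_remove_ipc_no` is a hypothetical helper that takes the file path so it can be tried on a copy first; appending at the end assumes `[Login]` is the last section of the file, as in the stock logind.conf.

```bash
#!/usr/bin/env bash
# set_remove_ipc_no FILE
# Ensures FILE contains exactly one active "RemoveIPC=no" line.
set_remove_ipc_no() {
    local f=$1
    if grep -qE '^[[:space:]]*#?[[:space:]]*RemoveIPC=' "$f"; then
        # Rewrite every existing (possibly commented) RemoveIPC line ...
        sed -i -E 's/^[[:space:]]*#?[[:space:]]*RemoveIPC=.*/RemoveIPC=no/' "$f"
        # ... then keep only the first occurrence.
        awk '/^RemoveIPC=no$/ { if (seen++) next } { print }' "$f" > "$f.tmp" && mv "$f.tmp" "$f"
    else
        printf 'RemoveIPC=no\n' >> "$f"
    fi
}

# set_remove_ipc_no /etc/systemd/logind.conf && service systemd-logind restart
```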

2.11 SSH connection threshold

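Greenplum Database management utilities such as gpexpand, gpinitsystem and gpaddmirrors use SSH connections to perform their tasks. In a large Greenplum cluster, the number of SSH connections a utility opens may exceed the host's maximum threshold for unauthenticated connections.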

When this happens, you receive the following error:

ssh_exchange_identification: Connection closed by remote host

To avoid this, raise the MaxStartups and MaxSessions parameters in /etc/ssh/sshd_config or /etc/sshd_config:

MaxStartups 200

MaxSessions 200

Restart sshd so that the parameters take effect:

service sshd restart
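A sketch for setting MaxStartups and MaxSessions idempotently. sshd_config uses `Keyword value` lines, and for most keywords sshd uses the first value it finds, so the sketch replaces the first existing or commented-out line in place instead of appending a second one. `set_sshd_option` is a hypothetical helper; it matches keywords case-sensitively, and anything it has to append goes to the end of the file, so move such a line above any `Match` block. Running `sshd -t` before restarting catches syntax errors.

```bash
#!/usr/bin/env bash
# set_sshd_option FILE KEYWORD VALUE
# Replaces the first active or commented "KEYWORD ..." line with "KEYWORD VALUE",
# or appends one at the end if the keyword does not appear at all.
set_sshd_option() {
    local f=$1 key=$2 val=$3
    awk -v k="$key" -v v="$val" '
        !done && ($1 == k || $1 == "#" k) { print k " " v; done = 1; next }
        { print }
        END { if (!done) print k " " v }' "$f" > "$f.tmp" && mv "$f.tmp" "$f"
}

# set_sshd_option /etc/ssh/sshd_config MaxStartups 200
# set_sshd_option /etc/ssh/sshd_config MaxSessions 200
# sshd -t && service sshd restart
```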

2.12 Synchronize the cluster clocks (NTP)

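To keep time consistent across all services in the cluster, first edit /etc/ntp.conf on the master server and point it at the data center's NTP server. If there is none, set the master server's clock to the correct time first, then edit /etc/ntp.conf on the other nodes so that they follow the master's time.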

vi /etc/ntp.conf

# Add these lines before the existing server entries

server mdw prefer # prefer the master node

server smdw # then the standby node; without a standby, use the data center's time server here

service ntpd restart # restart ntpd after editing

2.13 Check the character set

[root@mdw greenplum-db]# echo $LANG

en_US.UTF-8

If it is not, edit /etc/sysconfig/language and add RC_LANG=en_US.UTF-8

2.14 Create the gpadmin user

# Differences from older versions

gp4.x/gp5.x can create the gpadmin user while running gpseginstall, via its -u parameter.

gp6.2 has no gpseginstall tool, so the gpadmin user must be created before installation.

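Create the gpadmin user on every node to manage and run the GP cluster; granting it sudo privileges is advisable. Alternatively, create it on the master first and, once GP is installed there, create it on the other nodes in batch with gpssh. Example: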

[root@mdw ~]# groupadd gpadmin

[root@mdw ~]# useradd gpadmin -r -m -g gpadmin

[root@mdw ~]# passwd gpadmin

3. Install the cluster software

Reference: https://gpdb.docs.pivotal.io/6-2/install_guide/install_gpdb.html

# Differences from older versions

Up to gp4.x/gp5.x, installation consisted of four parts:

1. Install the master (usually a self-extracting .bin executable; the installation directory can be specified)

2. Install each segment host with gpseginstall

3. Validate the cluster parameters

4. Initialize the cluster with gpinitsystem

Starting with gp6.2, no zip archive is provided, only an rpm package:

1. Install the master (rpm -ivh / yum install -y); the installation directory cannot be chosen and defaults to /usr/local/

2. gp6 has no gpseginstall tool, so either package the GP directory installed on the master and copy it to the segment hosts, or install with yum on each node separately.

Steps:

1. Run yum on each node host separately, or

2. Package the master's installation directory and distribute it to the segment hosts.

3. Validate cluster performance

4. Initialize the cluster with gpinitsystem

3.1 Run the installer

# Run the installation; it installs into /usr/local/ by default.

yum install -y ./greenplum-db-6.2.1-rhel6-x86_64.rpm

# Or install with rpm

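rpm -ivh greenplum-db-6.2.1-rhel6-x86_64.rpm

The test machines are on an intranet and cannot download the dependencies, and they had not been downloaded in advance either; instead, each missing package was downloaded when the installer reported it. libyaml was missing, so it was downloaded, uploaded to the server and installed, and then the GP installer was run again. libyaml download: http://rpmfind.net/linux/rpm2html/search.php?query=libyaml(x86-64)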

[root@mdw gp_install_package]# yum install -y ./greenplum-db-6.2.1-rhel6-x86_64.rpm

Loaded plugins: product-id, refresh-packagekit, search-disabled-repos, security, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Setting up Install Process

Examining ./greenplum-db-6.2.1-rhel6-x86_64.rpm: greenplum-db-6.2.1-1.el6.x86_64

Marking ./greenplum-db-6.2.1-rhel6-x86_64.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package greenplum-db.x86_64 0:6.2.1-1.el6 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

=======================================================================================================================================================

Package Arch Version Repository Size

=======================================================================================================================================================

Installing:

greenplum-db x86_64 6.2.1-1.el6 /greenplum-db-6.2.1-rhel6-x86_64 493 M

Transaction Summary

=======================================================================================================================================================

Install 1 Package(s)

Total size: 493 M

Installed size: 493 M

Downloading Packages:

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Warning: RPMDB altered outside of yum.

Installing : greenplum-db-6.2.1-1.el6.x86_64 1/1

Verifying : greenplum-db-6.2.1-1.el6.x86_64 1/1

Installed:

greenplum-db.x86_64 0:6.2.1-1.el6

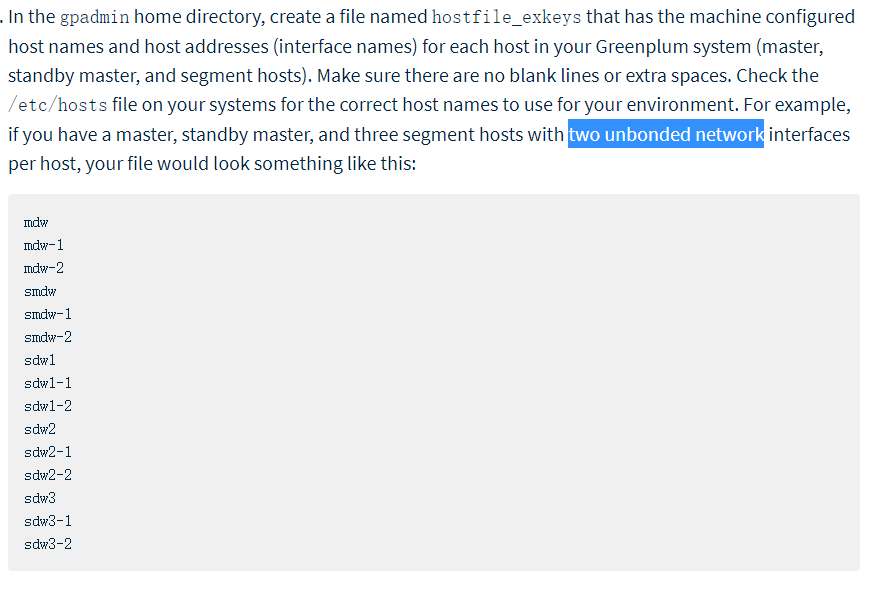

Complete!

3.2 Create the host files (hostfile_exkeys)

Create two host files (all_host, seg_host) in the $GPHOME directory; gpssh, gpscp and other scripts use them later as host-list parameter files.

all_host: the host names or IPs of all cluster hosts, including master, segments, standby, etc.

seg_host: the host names or IPs of all segment hosts

If a machine has several NICs that are not bonded (for example as bond0), list the IP or host name of every NIC.

[root@mdw ~]# cd /usr/local/

[root@mdw local]# ls

bin etc games greenplum-db greenplum-db-6.2.1 include lib lib64 libexec openssh-6.5p1 sbin share src ssl

[root@mdw local]# cd greenplum-db

[root@mdw greenplum-db]# ls

bin docs etc ext greenplum_path.sh include lib open_source_license_pivotal_greenplum.txt pxf sbin share

[root@mdw greenplum-db]# vi all_host

[root@mdw greenplum-db]# vi seg_host

[root@mdw greenplum-db]# cat all_host

mdw

sdw1

sdw2

[root@mdw greenplum-db]# cat seg_host

sdw1

sdw2

# Change the directory ownership

[root@mdw greenplum-db]# chown -R gpadmin:gpadmin /usr/local/greenplum*
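Since seg_host is all_host minus the master and standby hosts, it can be derived instead of typed twice. A minimal sketch, assuming the mdw/smdw naming convention from 2.2.1; `make_seg_host` is a hypothetical helper whose optional trailing arguments override the list of names to exclude.

```bash
#!/usr/bin/env bash
# make_seg_host ALL_HOST_FILE SEG_HOST_FILE [EXCLUDED_NAMES...]
# Copies every non-empty, non-comment line of ALL_HOST_FILE except the excluded
# (master/standby) names; the default exclusion list is "mdw smdw".
make_seg_host() {
    local all=$1 seg=$2
    shift 2
    local masters=" ${*:-mdw smdw} "
    local h
    : > "$seg"
    # "|| [[ -n $h ]]" also keeps a last line that has no trailing newline
    while read -r h || [[ -n $h ]]; do
        [[ -z $h || $h == \#* ]] && continue
        [[ $masters == *" $h "* ]] && continue
        printf '%s\n' "$h" >> "$seg"
    done < "$all"
}

# cd /usr/local/greenplum-db && make_seg_host all_host seg_host
```

Hosts listed under several NIC names stay in the output, which matches the multi-NIC advice above.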

3.3 Cluster trust: passwordless SSH login

## Differences from older versions

Before gp6.x, step 3.3.1 (generating keys with ssh-keygen) and step 3.3.2 (ssh-copy-id) were not needed; gpssh-exkeys -f all_host could be run directly.

3.3.1 Generate a key pair

# This Linux host has no key pair yet, so generate one first

[root@gjzq-sh-mb greenplum-db]# ssh-keygen

3.3.2 Copy the local public key into the authorized_keys file on every node

[root@gjzq-sh-mb greenplum-db]# ssh-copy-id sdw1

[root@gjzq-sh-mb greenplum-db]# ssh-copy-id sdw2

3.3.3 Set up n-to-n passwordless login with gpssh-exkeys

[root@gjzq-sh-mb greenplum-db]# gpssh-exkeys -f all_host

Problem getting hostname for gpzq-sh-mb: [Errno -3] Temporary failure in name resolution

Traceback (most recent call last):

File "/usr/local/greenplum-db/./bin/gpssh-exkeys", line 409, in <module>

(primary, aliases, ipaddrs) = socket.gethostbyaddr(hostname)

socket.gaierror: [Errno -3] Temporary failure in name resolution

# The error above occurred because section 2.2.1 (set the hostname of each machine) had been skipped

[root@gjzq-sh-mb greenplum-db]# hostname mdw

[root@gjzq-sh-mb greenplum-db]# gpssh-exkeys -f all_host

[STEP 1 of 5] create local ID and authorize on local host

... /root/.ssh/id_rsa file exists ... key generation skipped

[STEP 2 of 5] keyscan all hosts and update known_hosts file

[STEP 3 of 5] retrieving credentials from remote hosts

... send to sdw1

... send to sdw2

[STEP 4 of 5] determine common authentication file content

[STEP 5 of 5] copy authentication files to all remote hosts

... finished key exchange with sdw1

... finished key exchange with sdw2

[INFO] completed successfully

3.3.4 Verify gpssh

The official docs verify against the directory /usr/local/greenplum-db-<version>:

gpssh -f hostfile_exkeys -e 'ls -l /usr/local/greenplum-db-<version>'

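Following the usual order of steps, GP is not yet installed on the segment hosts of the test cluster, so /usr/local/greenplum-db-<version> does not exist there; any shell command is enough to check that gpssh works.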

[root@mdw ~]# source /usr/local/greenplum-db/greenplum_path.sh

[root@mdw ~]# gpssh -f /usr/local/greenplum-db/all_host -e 'ls /usr/local/'

[ mdw] ls /usr/local/

[ mdw] bin games greenplum-db-6.2.1 lib libexec sbin src

[ mdw] etc greenplum-db include lib64 openssh-6.5p1 share ssl

[sdw1] ls /usr/local/

[sdw1] bin games lib libexec sbin src

[sdw1] etc include lib64 openssh-6.5p1 share ssl

[sdw2] ls /usr/local/

[sdw2] bin games lib libexec sbin src

[sdw2] etc include lib64 openssh-6.5p1 share ssl

3.4 Sync the master configuration to all hosts (not an official step)

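This step is not part of the official guide, which makes all hosts identical already during the system-parameter step. In this document the parameter section above only changed the master host, so this step brings the whole cluster to the same configuration.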

3.4.1 Add the gpadmin user in batch

[root@mdw greenplum-db]# source greenplum_path.sh

[root@mdw greenplum-db]# gpssh -f seg_host -e 'groupadd gpadmin;useradd gpadmin -r -m -g gpadmin;echo "gpadmin" | passwd --stdin gpadmin;'

[root@mdw greenplum-db]# gpssh -f seg_host -e 'ls /home/'

3.4.2 Set up passwordless login for the gpadmin user

## Differences from older versions

Before gp6, passwordless login for the gpadmin user was handled automatically by gpseginstall; in gp6 it has to be set up manually.

[root@mdw greenplum-db-6.2.1]# su - gpadmin

[gpadmin@mdw ~]$ source /usr/local/greenplum-db/greenplum_path.sh

[gpadmin@mdw ~]$ ssh-keygen

[gpadmin@mdw ~]$ ssh-copy-id sdw1

[gpadmin@mdw ~]$ ssh-copy-id sdw2

[gpadmin@mdw ~]$ gpssh-exkeys -f /usr/local/greenplum-db/all_host

3.4.3 Set the Greenplum environment variables for gpadmin in batch

Add the GP installation directory and environment settings to the user's environment.

Edit .bash_profile and .bashrc

cat >> /home/gpadmin/.bash_profile << EOF

source /usr/local/greenplum-db/greenplum_path.sh

EOF

# Distribute the environment files to the other nodes

gpscp -f /usr/local/greenplum-db/seg_host /home/gpadmin/.bash_profile gpadmin@=:/home/gpadmin/.bash_profile

gpscp -f /usr/local/greenplum-db/seg_host /home/gpadmin/.bashrc gpadmin@=:/home/gpadmin/.bashrc

3.4.4 Copy the system parameters to the other nodes in batch

This step distributes the system parameters configured on the master above to the other cluster nodes.

# Example:

su root

gpscp -f seg_host /etc/hosts root@=:/etc/hosts

gpscp -f seg_host /etc/security/limits.conf root@=:/etc/security/limits.conf

gpscp -f seg_host /etc/sysctl.conf root@=:/etc/sysctl.conf

gpscp -f seg_host /etc/security/limits.d/90-nproc.conf root@=:/etc/security/limits.d/90-nproc.conf

gpssh -f seg_host -e '/sbin/blockdev --setra 16384 /dev/sda'

gpssh -f seg_host -e 'echo deadline > /sys/block/sda/queue/scheduler'

gpssh -f seg_host -e 'sysctl -p'

gpssh -f seg_host -e 'reboot'

3.5 Install the segment nodes

## Differences from older versions

The official docs currently lack this part. Before gp6, the gpseginstall tool installed the GP software on each node. Its log shows that gpseginstall mainly does the following:

1. Create the GP user on the nodes (can be skipped here)

2. Package the master's installation directory

3. scp it to each segment server

4. Extract it and create the symbolic link

5. Change ownership to gpadmin (as seen in the gpseginstall installation log)

3.5.1 A script that imitates gpseginstall

The following script imitates the main steps of gpseginstall to deploy GP to the segment hosts.

# Run as root

# Variables

link_name='greenplum-db' # symlink name

binary_dir_location='/usr/local' # parent directory of the installation

binary_dir_name='greenplum-db-6.2.1' # installation directory name

binary_path='/usr/local/greenplum-db-6.2.1' # full path

# Package on the master node

chown -R gpadmin:gpadmin $binary_path

rm -f ${binary_path}.tar; rm -f ${binary_path}.tar.gz

cd $binary_dir_location; tar cf ${binary_dir_name}.tar ${binary_dir_name}

gzip ${binary_path}.tar

# Distribute to the segment hosts

gpssh -f ${binary_path}/seg_host -e "mkdir -p ${binary_dir_location};rm -rf ${binary_path};rm -rf ${binary_path}.tar;rm -rf ${binary_path}.tar.gz"

gpscp -f ${binary_path}/seg_host ${binary_path}.tar.gz root@=:${binary_path}.tar.gz

gpssh -f ${binary_path}/seg_host -e "cd ${binary_dir_location};gzip -f -d ${binary_path}.tar.gz;tar xf ${binary_path}.tar"

gpssh -f ${binary_path}/seg_host -e "rm -rf ${binary_path}.tar;rm -rf ${binary_path}.tar.gz;rm -f ${binary_dir_location}/${link_name}"

gpssh -f ${binary_path}/seg_host -e "ln -fs ${binary_dir_location}/${binary_dir_name} ${binary_dir_location}/${link_name}"

gpssh -f ${binary_path}/seg_host -e "chown -R gpadmin:gpadmin ${binary_dir_location}/${link_name};chown -R gpadmin:gpadmin ${binary_dir_location}/${binary_dir_name}"

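# Sanity check that greenplum_path.sh sources cleanly on each host (the effect does not persist after gpssh exits)
gpssh -f ${binary_path}/seg_host -e "source ${binary_path}/greenplum_path.sh"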

gpssh -f ${binary_path}/seg_host -e "cd ${binary_dir_location};ls -l"

3.5.2 Create the cluster data directories

3.5.2.1 Create the master data directory

mkdir -p /opt/greenplum/data/master

chown gpadmin:gpadmin /opt/greenplum/data/master

# Standby data directory (this test has no standby)

# Create the standby data directory remotely with gpssh

# source /usr/local/greenplum-db/greenplum_path.sh

# gpssh -h smdw -e 'mkdir -p /data/master'

# gpssh -h smdw -e 'chown gpadmin:gpadmin /data/master'

3.5.2.2 Create the segment data directories

This test places two primary segments and two mirrors on each host.
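Before creating the directories, it can help to preview which segment instance will land where. The sketch below assumes gpinitsystem's default group mirroring in GP6, where the mirrors of one host's primaries are placed on the next host in the list; that assumption agrees with the segment list printed by gpdeletesystem at the end of this document (gpseg0: primary on sdw1 port 6000, mirror on sdw2 port 7000). `preview_layout` is a hypothetical helper; the ports and directories are those used in 4.1.2.

```bash
#!/usr/bin/env bash
# preview_layout PORT_BASE MIRROR_PORT_BASE "PRIMARY_DIRS" "MIRROR_DIRS" HOST...
# Prints "content role host:dir/gpsegN:port" lines, assuming group mirroring:
# the primaries of host i are mirrored on host (i+1) mod N, in the same directory slot.
preview_layout() {
    local pbase=$1 mbase=$2
    local -a pdirs=($3) mdirs=($4)   # space-separated lists; paths must not contain spaces
    shift 4
    local -a hosts=("$@")
    local n=${#hosts[@]} per=${#pdirs[@]}
    local h d c mh
    for (( h = 0; h < n; h++ )); do
        mh=${hosts[$(( (h + 1) % n ))]}
        for (( d = 0; d < per; d++ )); do
            c=$(( h * per + d ))
            echo "$c primary ${hosts[h]}:${pdirs[d]}/gpseg$c:$(( pbase + d ))"
            echo "$c mirror  $mh:${mdirs[d]}/gpseg$c:$(( mbase + d ))"
        done
    done
}

# preview_layout 6000 7000 "/opt/greenplum/data1/primary /opt/greenplum/data2/primary" \
#     "/opt/greenplum/data1/mirror /opt/greenplum/data2/mirror" sdw1 sdw2
```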

source /usr/local/greenplum-db/greenplum_path.sh

gpssh -f /usr/local/greenplum-db/seg_host -e 'mkdir -p /opt/greenplum/data1/primary'

gpssh -f /usr/local/greenplum-db/seg_host -e 'mkdir -p /opt/greenplum/data1/mirror'

gpssh -f /usr/local/greenplum-db/seg_host -e 'mkdir -p /opt/greenplum/data2/primary'

gpssh -f /usr/local/greenplum-db/seg_host -e 'mkdir -p /opt/greenplum/data2/mirror'

gpssh -f /usr/local/greenplum-db/seg_host -e 'chown -R gpadmin /opt/greenplum/data*'

3.6 Cluster performance tests

## Differences from older versions

gp6 removed the gpcheck tool. What can still be validated is network and disk I/O performance.

The gpcheck tool used to validate the system parameters and hardware configuration that GP requires.

For details see the official docs: https://gpdb.docs.pivotal.io/6-2/install_guide/validate.html#topic1

Further reading: https://yq.aliyun.com/articles/230896?spm=a2c4e.11155435.0.0.a9756e1eIiHSoH

Personal experience (for reference only; proper standards still need to be researched):

- Disks should generally reach 2000M/s

- The network should reach at least 1000M/s

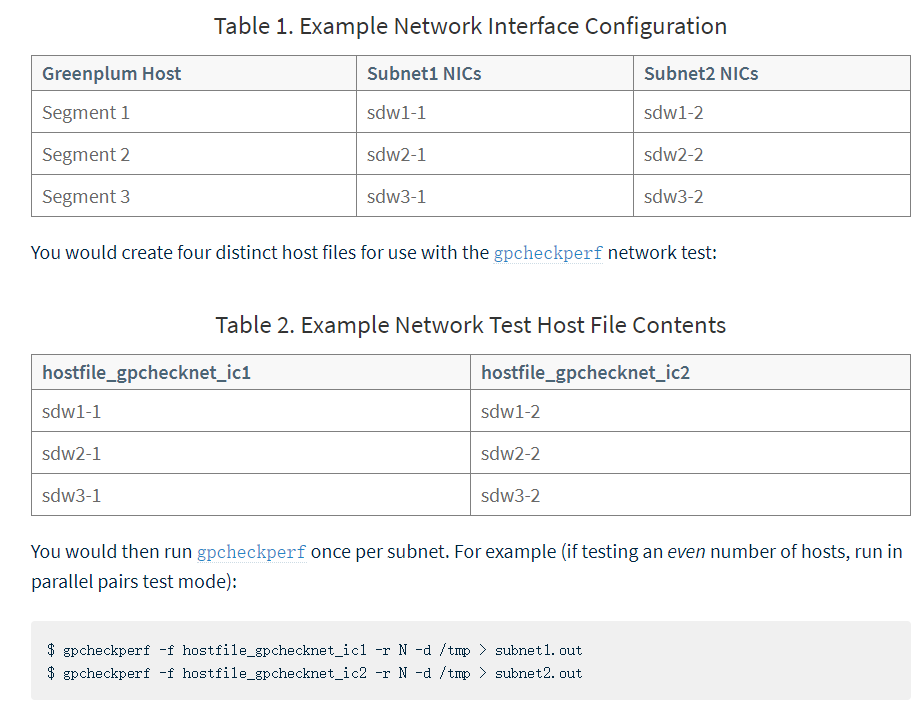

3.6.1 Network performance test

# Example

[root@mdw local]# gpcheckperf -f /usr/local/greenplum-db/seg_host -r N -d /tmp

/usr/local/greenplum-db/./bin/gpcheckperf -f /usr/local/greenplum-db/seg_host -r N -d /tmp

-------------------

-- NETPERF TEST

-------------------

Authorized only. All activity will be monitored and reported

NOTICE: -t is deprecated, and has no effect

NOTICE: -f is deprecated, and has no effect

Authorized only. All activity will be monitored and reported

NOTICE: -t is deprecated, and has no effect

NOTICE: -f is deprecated, and has no effect

[Warning] netperf failed on sdw2 -> sdw1

====================

== RESULT 2019-12-18T19:40:30.264321

====================

Netperf bisection bandwidth test

sdw1 -> sdw2 = 2273.930000

Summary:

sum = 2273.93 MB/sec

min = 2273.93 MB/sec

max = 2273.93 MB/sec

avg = 2273.93 MB/sec

median = 2273.93 MB/sec

The test reported netperf failed on sdw2 -> sdw1. The cause was that /etc/hosts had not been configured on sdw2; fixing the hosts file on sdw2 resolved it.
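The per-pair results of the NETPERF TEST have the form `src -> dst = value`, in MB/sec. A hedged sketch that flags pairs below a threshold and also reports `netperf failed` warnings like the one above; `slow_links` is a hypothetical helper, and the threshold is a parameter because the rule of thumb in 3.6 does not pin down its unit.

```bash
#!/usr/bin/env bash
# slow_links THRESHOLD_MBPS < gpcheckperf_output
# Prints every "src -> dst = value" pair below THRESHOLD_MBPS and every netperf failure,
# and exits 1 if it printed anything.
slow_links() {
    awk -v t="$1" '
        /netperf failed/        { print "FAILED: " $0; bad = 1; next }
        $2 == "->" && $4 == "=" { if ($5 + 0 < t) { print $1 " -> " $3 " = " $5; bad = 1 } }
        END { exit bad }'
}

# gpcheckperf -f /usr/local/greenplum-db/seg_host -r N -d /tmp | slow_links 1000
```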

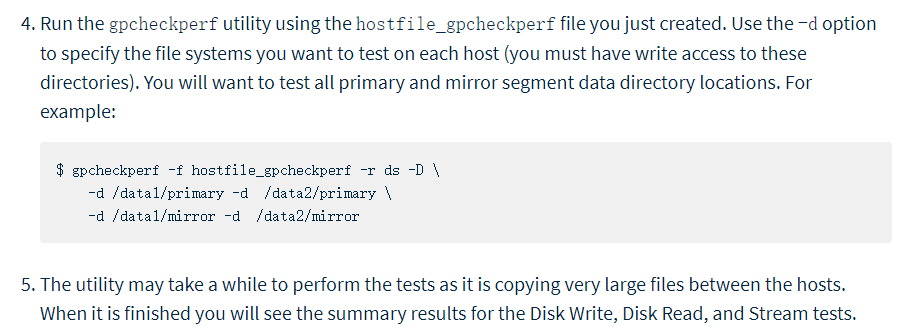

3.6.2 Disk I/O performance test

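In this test each host runs two segments but has only one disk, so testing a single directory is enough. The test writes about 32 GB of data on each host, so leave enough free disk space.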

gpcheckperf -f /usr/local/greenplum-db/seg_host -r ds -D -d /opt/greenplum/data1/primary

[root@mdw greenplum-db]# gpcheckperf -f /usr/local/greenplum-db/seg_host -r ds -D -d /opt/greenplum/data1/primary

/usr/local/greenplum-db/./bin/gpcheckperf -f /usr/local/greenplum-db/seg_host -r ds -D -d /opt/greenplum/data1/primary

--------------------

-- DISK WRITE TEST

--------------------

--------------------

-- DISK READ TEST

--------------------

--------------------

-- STREAM TEST

--------------------

====================

== RESULT 2019-12-18T19:59:06.969229

====================

disk write avg time (sec): 47.34

disk write tot bytes: 66904850432

disk write tot bandwidth (MB/s): 1411.59

disk write min bandwidth (MB/s): 555.60 [sdw2]

disk write max bandwidth (MB/s): 855.99 [sdw1]

-- per host bandwidth --

disk write bandwidth (MB/s): 855.99 [sdw1]

disk write bandwidth (MB/s): 555.60 [sdw2]

disk read avg time (sec): 87.33

disk read tot bytes: 66904850432

disk read tot bandwidth (MB/s): 738.54

disk read min bandwidth (MB/s): 331.15 [sdw2]

disk read max bandwidth (MB/s): 407.39 [sdw1]

-- per host bandwidth --

disk read bandwidth (MB/s): 407.39 [sdw1]

disk read bandwidth (MB/s): 331.15 [sdw2]

stream tot bandwidth (MB/s): 12924.30

stream min bandwidth (MB/s): 6451.80 [sdw1]

stream max bandwidth (MB/s): 6472.50 [sdw2]

-- per host bandwidth --

stream bandwidth (MB/s): 6451.80 [sdw1]

stream bandwidth (MB/s): 6472.50 [sdw2]

3.6.3 Cluster clock check (not an official step)

# Check the time on every host; if they differ, fix the NTP setup

gpssh -f /usr/local/greenplum-db/all_host -e 'date'

4. Cluster initialization

Official docs: https://gpdb.docs.pivotal.io/6-2/install_guide/init_gpdb.html

4.1 Write the initialization configuration file

4.1.1 Copy the configuration file template

su - gpadmin

mkdir -p /home/gpadmin/gpconfigs

cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config /home/gpadmin/gpconfigs/gpinitsystem_config

4.1.2 Modify the parameters as needed

Note: To specify PORT_BASE, review the port range specified in the net.ipv4.ip_local_port_range parameter in the /etc/sysctl.conf file.

Main parameters changed:

ARRAY_NAME="Greenplum Data Platform"

SEG_PREFIX=gpseg

PORT_BASE=6000

declare -a DATA_DIRECTORY=(/opt/greenplum/data1/primary /opt/greenplum/data2/primary)

MASTER_HOSTNAME=mdw

MASTER_DIRECTORY=/opt/greenplum/data/master

MASTER_PORT=5432

TRUSTED_SHELL=ssh

CHECK_POINT_SEGMENTS=8

ENCODING=UNICODE

MIRROR_PORT_BASE=7000

declare -a MIRROR_DATA_DIRECTORY=(/opt/greenplum/data1/mirror /opt/greenplum/data2/mirror)

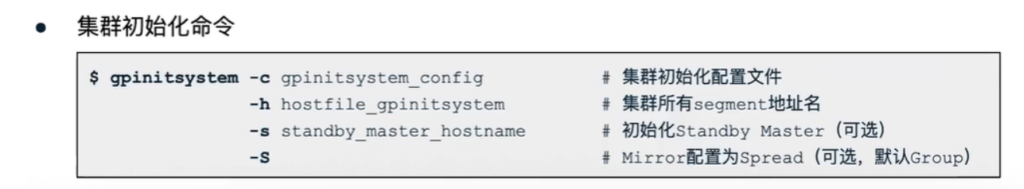

DATABASE_NAME=yjbdw

4.2 Cluster initialization

4.2.1 Initialization command and parameters

# Initialization command

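# -c: configuration file; -h: file listing the segment hosts; -D: debug-level logging
gpinitsystem -c /home/gpadmin/gpconfigs/gpinitsystem_config -h /usr/local/greenplum-db/seg_host -D

4.2.2 Handling errors during execution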

[gpadmin@mdw gpconfigs]$ gpinitsystem -c /home/gpadmin/gpconfigs/gpinitsystem_config -h /usr/local/greenplum-db/seg_host -D

...

/usr/local/greenplum-db/./bin/gpinitsystem: line 244: /tmp/cluster_tmp_file.8070: Permission denied

/bin/mv: cannot stat `/tmp/cluster_tmp_file.8070': Permission denied

...

20191218:20:22:57:008070 gpinitsystem:mdw:gpadmin-[FATAL]:-Unknown host sdw1: ping: icmp open socket: Operation not permitted

unknown host Script Exiting!

4.2.2.1 Handling the Permission denied error

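# Restore the standard /tmp mode: world-writable with the sticky bit (plain 777 would drop the sticky bit)
gpssh -f /usr/local/greenplum-db/all_host -e 'chmod 1777 /tmp'

4.2.2.2 Handling the icmp open socket: Operation not permitted error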

gpssh -f /usr/local/greenplum-db/all_host -e 'chmod u+s /bin/ping'

4.2.2.3 Rolling back a failed run

If the installation fails midway, gpinitsystem tells you to roll back with bash /home/gpadmin/gpAdminLogs/backout_gpinitsystem_gpadmin_*; just run that script. For example:

...

20191218:20:39:53:011405 gpinitsystem:mdw:gpadmin-[FATAL]:-Unknown host gpzq-sh-mb: ping: unknown host gpzq-sh-mb

unknown host Script Exiting!

20191218:20:39:53:011405 gpinitsystem:mdw:gpadmin-[WARN]:-Script has left Greenplum Database in an incomplete state

20191218:20:39:53:011405 gpinitsystem:mdw:gpadmin-[WARN]:-Run command bash /home/gpadmin/gpAdminLogs/backout_gpinitsystem_gpadmin_20191218_203938 to remove these changes

20191218:20:39:53:011405 gpinitsystem:mdw:gpadmin-[INFO]:-Start Function BACKOUT_COMMAND

20191218:20:39:53:011405 gpinitsystem:mdw:gpadmin-[INFO]:-End Function BACKOUT_COMMAND

Run the cleanup (backout) script:

[gpadmin@mdw gpAdminLogs]$ ls

backout_gpinitsystem_gpadmin_20191218_203938 gpinitsystem_20191218.log

[gpadmin@mdw gpAdminLogs]$ bash backout_gpinitsystem_gpadmin_20191218_203938

Stopping Master instance

waiting for server to shut down.... done

server stopped

Removing Master log file

Removing Master lock files

Removing Master data directory files

If things are still not fully cleaned up afterwards, run the following before reinstalling:

pg_ctl -D /opt/greenplum/data/master/gpseg-1 stop

rm -f /tmp/.s.PGSQL.5432 /tmp/.s.PGSQL.5432.lock

rm -Rf /opt/greenplum/data/master/gpseg-1

4.2.2.4 The "ping: unknown host gpzq-sh-mb unknown host Script Exiting!" error

See: http://note.youdao.com/noteshare?id=8a72fdf1ec13a1c79b2d795e406b3dd2&sub=313FE99D57C84F2EA498DB6D7B79C7D3

Edit /home/gpadmin/.gphostcache so that it contains the following:

[gpadmin@mdw ~]$ cat .gphostcache

mdw:mdw

sdw1:sdw1

sdw2:sdw2

4.3 Follow-up after initialization

When initialization succeeds, it prints Greenplum Database instance successfully created. The log is written to /home/gpadmin/gpAdminLogs/ and named gpinitsystem_<YYYYMMDD>.log. The end of the log looks like this:

...

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[WARN]:-*******************************************************

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[WARN]:-Scan of log file indicates that some warnings or errors

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[WARN]:-were generated during the array creation

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-Please review contents of log file

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-/home/gpadmin/gpAdminLogs/gpinitsystem_20191218.log

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-To determine level of criticality

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-These messages could be from a previous run of the utility

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-that was called today!

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[WARN]:-*******************************************************

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-End Function SCAN_LOG

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-Greenplum Database instance successfully created

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-To complete the environment configuration, please

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-update gpadmin .bashrc file with the following

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-1. Ensure that the greenplum_path.sh file is sourced

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-2. Add "export MASTER_DATA_DIRECTORY=/opt/greenplum/data/master/gpseg-1"

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:- to access the Greenplum scripts for this instance:

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:- or, use -d /opt/greenplum/data/master/gpseg-1 option for the Greenplum scripts

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:- Example gpstate -d /opt/greenplum/data/master/gpseg-1

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-Script log file = /home/gpadmin/gpAdminLogs/gpinitsystem_20191218.log

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-To remove instance, run gpdeletesystem utility

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-To initialize a Standby Master Segment for this Greenplum instance

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-Review options for gpinitstandby

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-The Master /opt/greenplum/data/master/gpseg-1/pg_hba.conf post gpinitsystem

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-has been configured to allow all hosts within this new

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-array to intercommunicate. Any hosts external to this

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-new array must be explicitly added to this file

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-Refer to the Greenplum Admin support guide which is

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-located in the /usr/local/greenplum-db/./docs directory

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

20191218:20:45:51:013612 gpinitsystem:mdw:gpadmin-[INFO]:-End Main

Read the end of the log carefully: a few more steps remain.

4.3.1 Check the log

# The log contains the following notice:

Scan of log file indicates that some warnings or errors

were generated during the array creation

Please review contents of log file

/home/gpadmin/gpAdminLogs/gpinitsystem_20191218.log

Check the installation log for errors:

#Scan warnings or errors:

grep -E -i 'WARN|ERROR|FATAL' /home/gpadmin/gpAdminLogs/gpinitsystem_20191218.log

Adjust the configuration according to what the log reports, so that the cluster performs as well as possible.
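To get an overview before reading the messages one by one, a sketch that counts gpinitsystem log lines per level. It assumes the `gpadmin-[LEVEL]:-message` format visible in the log excerpts above; `log_level_counts` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# log_level_counts LOGFILE
# Prints "LEVEL count" for each [LEVEL]:- tag (INFO, WARN, ERROR, FATAL, ...) in LOGFILE,
# sorted by level name.
log_level_counts() {
    LC_ALL=C grep -oE '\[[A-Z]+]:-' "$1" |
        LC_ALL=C sed 's/[^A-Z]//g' |
        LC_ALL=C sort | uniq -c |
        awk '{ print $2, $1 }'
}

# log_level_counts /home/gpadmin/gpAdminLogs/gpinitsystem_20191218.log
```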

4.3.2 Set the environment variables

# Edit the gpadmin user's environment and add:

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/opt/greenplum/data/master/gpseg-1

For details on the environment variables, see: https://gpdb.docs.pivotal.io/510/install_guide/env_var_ref.html

source /usr/local/greenplum-db/greenplum_path.sh was already added earlier, so here only the following is appended to .bash_profile:

su - gpadmin

cat >> /home/gpadmin/.bash_profile << EOF

export MASTER_DATA_DIRECTORY=/opt/greenplum/data/master/gpseg-1

export PGPORT=5432

export PGUSER=gpadmin

export PGDATABASE=yjbdw

EOF

Distribute the files to every node:

gpscp -f /usr/local/greenplum-db/seg_host /home/gpadmin/.bash_profile gpadmin@=:/home/gpadmin/.bash_profile

gpscp -f /usr/local/greenplum-db/seg_host /home/gpadmin/.bashrc gpadmin@=:/home/gpadmin/.bashrc

gpssh -f /usr/local/greenplum-db/all_host -e 'source /home/gpadmin/.bash_profile;source /home/gpadmin/.bashrc;'

4.3.3 Deleting and reinstalling with gpdeletesystem

If, for whatever reason, the cluster has to be deleted and reinstalled after installation, use the gpdeletesystem tool.

For details see the official docs: https://gpdb.docs.pivotal.io/6-2/utility_guide/ref/gpdeletesystem.html#topic1

Command:

gpdeletesystem -d /opt/greenplum/data/master/gpseg-1 -f

-d is followed by MASTER_DATA_DIRECTORY (the master's data directory); the command removes all master and segment data directories.

-f force, 终止所有进程,强制删除。
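Before answering y, it can help to see which directories on each host are about to be removed. The sketch below groups Host:Datadir:Port entries by host; it accepts either bare entries or the raw Segment Instance List lines from the log below (it strips everything up to "]:-"). The function name dirs_by_host is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: group "Host:Datadir:Port" entries (one per line on stdin)
# by host. Raw gpdeletesystem log lines are accepted: the "...]:-" prefix is stripped.
dirs_by_host() {
  awk -F: '
    { sub(/^.*[]]:-/, "") }                             # drop log prefix; fields are re-split
    NF == 3 && $2 ~ /^\// { dirs[$1] = dirs[$1] " " $2 } # keep only entries with a path
    END { for (h in dirs) print h ":" dirs[h] }
  ' | sort
}
```

While the cluster is still running, the same list can be taken from the GP6 catalog, for example: psql -At -d postgres -c "SELECT hostname || ':' || datadir || ':' || port FROM gp_segment_configuration" | dirs_by_host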

[gpadmin@mdw ~]$ gpdeletesystem --help

[gpadmin@mdw ~]$ gpdeletesystem -d /opt/greenplum/data/master/gpseg-1 -f

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Checking for database dump files...

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Getting segment information...

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Greenplum Instance Deletion Parameters

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:---------------------------------------

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Greenplum Master hostname = localhost

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Greenplum Master data directory = /opt/greenplum/data/master/gpseg-1

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Greenplum Master port = 5432

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Greenplum Force delete of dump files = ON

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Batch size = 32

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:---------------------------------------

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:- Segment Instance List

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:---------------------------------------

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Host:Datadir:Port

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-mdw:/opt/greenplum/data/master/gpseg-1:5432

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw1:/opt/greenplum/data1/primary/gpseg0:6000

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw2:/opt/greenplum/data1/mirror/gpseg0:7000

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw1:/opt/greenplum/data2/primary/gpseg1:6001

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw2:/opt/greenplum/data2/mirror/gpseg1:7001

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw2:/opt/greenplum/data1/primary/gpseg2:6000

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw1:/opt/greenplum/data1/mirror/gpseg2:7000

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw2:/opt/greenplum/data2/primary/gpseg3:6001

20191219:09:47:57:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-sdw1:/opt/greenplum/data2/mirror/gpseg3:7001

Continue with Greenplum instance deletion? Yy|Nn (default=N):

> y

20191219:09:48:01:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-FINAL WARNING, you are about to delete the Greenplum instance

20191219:09:48:01:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-on master host localhost.

20191219:09:48:01:020973 gpdeletesystem:mdw:gpadmin-[WARNING]:-There are database dump files, these will be DELETED if you continue!

Continue with Greenplum instance deletion? Yy|Nn (default=N):

> y

20191219:09:48:15:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Stopping database...

20191219:09:48:17:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Deleting tablespace directories...

20191219:09:48:17:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Waiting for worker threads to delete tablespace dirs...

20191219:09:48:17:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Deleting segments and removing data directories...

20191219:09:48:17:020973 gpdeletesystem:mdw:gpadmin-[INFO]:-Waiting for worker threads to complete...

20191219:09:48:18:020973 gpdeletesystem:mdw:gpadmin-[WARNING]:-Delete system completed but warnings were generated.

After the deletion completes, adjust the cluster initialization configuration file as needed and initialize the cluster again.

vi /home/gpadmin/gpconfigs/gpinitsystem_config

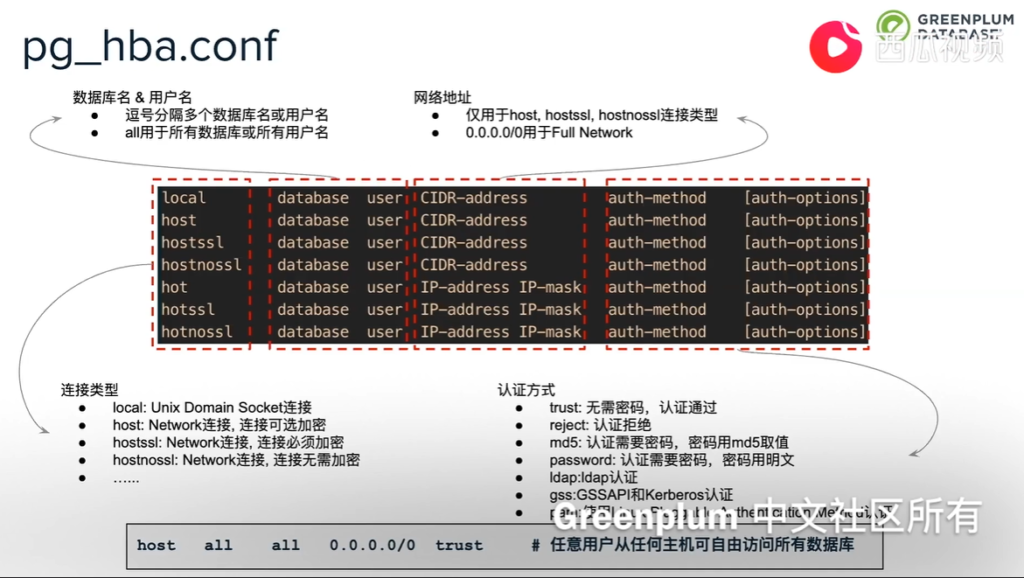
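Because gpinitsystem_config uses bash syntax, it can be sourced in a subshell to sanity-check the planned layout before re-running gpinitsystem. The sketch below assumes the standard parameter names DATA_DIRECTORY and MIRROR_DATA_DIRECTORY and one segment host per line in seg_host; check_layout is a hypothetical helper. Judging from the instance list above, this cluster would use two entries in each array (data1 and data2), giving 2 hosts x 2 = 4 primaries and 4 mirrors.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report primaries/mirrors per host from a gpinitsystem_config.
# Assumes the standard DATA_DIRECTORY / MIRROR_DATA_DIRECTORY array parameters.
check_layout() {
  local config="$1" hostfile="$2"
  (
    # Source in a subshell so the config's variables do not leak into the caller
    # shellcheck source=/dev/null
    source "$config"
    # Count non-blank lines in the host file (one segment host per line)
    hosts=$(grep -c -v -e '^[[:space:]]*$' "$hostfile" || true)
    primaries=${#DATA_DIRECTORY[@]}
    mirrors=${#MIRROR_DATA_DIRECTORY[@]}
    echo "hosts=${hosts} primaries_per_host=${primaries} mirrors_per_host=${mirrors}"
    echo "total_primaries=$(( hosts * primaries )) total_mirrors=$(( hosts * mirrors ))"
  )
}
```

Usage: check_layout /home/gpadmin/gpconfigs/gpinitsystem_config /usr/local/greenplum-db/seg_host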

gpinitsystem -c /home/gpadmin/gpconfigs/gpinitsystem_config -h /usr/local/greenplum-db/seg_host -D

4.3.4 Configure pg_hba.conf

# Configure pg_hba.conf according to your access requirements.

/opt/greenplum/data/master/gpseg-1/pg_hba.conf

# For details, see section 5.2.1 (Configure pg_hba.conf) below.

5 Post-installation configuration

5.1 Log in to GP with psql and set a password

The general format of the psql login command is:

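psql -h hostname -p port -d database -U user -W

-h: host name of the master or segment

-p: port number of the master or segment

-d: database name

-U: user name

-W: force a password prompt (psql does not accept the password as a command-line argument)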
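The same connection settings map onto libpq's environment variables (PGHOST, PGPORT, PGUSER, PGDATABASE), and psql also accepts a single connection URI in place of the individual flags. The helper below is a hypothetical sketch that assembles such a URI from those variables; its fallbacks (localhost, port 5432, the current OS user) are assumptions of the sketch, since libpq itself defaults to a Unix-domain socket rather than localhost.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a postgresql:// URI from libpq environment variables.
# Fallback values (localhost, 5432, current OS user) are assumptions for this sketch.
gp_conn_uri() {
  local host="${PGHOST:-localhost}"
  local port="${PGPORT:-5432}"
  local user="${PGUSER:-$(id -un)}"
  # libpq defaults the database name to the user name when PGDATABASE is unset
  local db="${PGDATABASE:-$user}"
  printf 'postgresql://%s@%s:%s/%s\n' "$user" "$host" "$port" "$db"
}
```

Usage: psql "$(gp_conn_uri)"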

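These parameters can also be set in the user's environment variables (as with PGPORT, PGUSER and PGDATABASE in section 4.3.2); when logged in to Linux as gpadmin, no password is needed. Example of logging in with psql and setting the gpadmin password: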

[gpadmin@mdw gpconfigs]$ psql

psql (9.4.24)

Type "help" for help.

yjbdw=# ALTER USER gpadmin WITH PASSWORD 'gpadmin';

ALTER ROLE

yjbdw=# \q

5.1.1 Log in to different nodes

# Parameter examples:

# Log in to the master node

[gpadmin@mdw gpconfigs]$ PGOPTIONS='-c gp_session_role=utility' psql -h mdw -p5432 -d postgres

# Log in to a segment; the segment port must be specified.

[gpadmin@mdw gpconfigs]$ PGOPTIONS='-c gp_session_role=utility' psql -h sdw1 -p6000 -d postgres
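With several segments per host, it is easy to mix up ports. A minimal sketch, assuming host:port pairs as input, prints one utility-mode psql command per primary; utility_cmds is a hypothetical helper name. In GP6 the pairs can be read from the gp_segment_configuration catalog table (role 'p' marks primaries, content -1 is the master).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a utility-mode psql command for each "host:port" argument.
utility_cmds() {
  local hp host port
  for hp in "$@"; do
    host="${hp%%:*}"   # text before the first ':'
    port="${hp##*:}"   # text after the last ':'
    printf "PGOPTIONS='-c gp_session_role=utility' psql -h %s -p %s -d postgres\n" "$host" "$port"
  done
}
```

Usage: utility_cmds $(psql -At -d postgres -c "SELECT hostname || ':' || port FROM gp_segment_configuration WHERE role = 'p' AND content >= 0")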

5.2 Client login to GP

- Configure pg_hba.conf

- Configure postgresql.conf

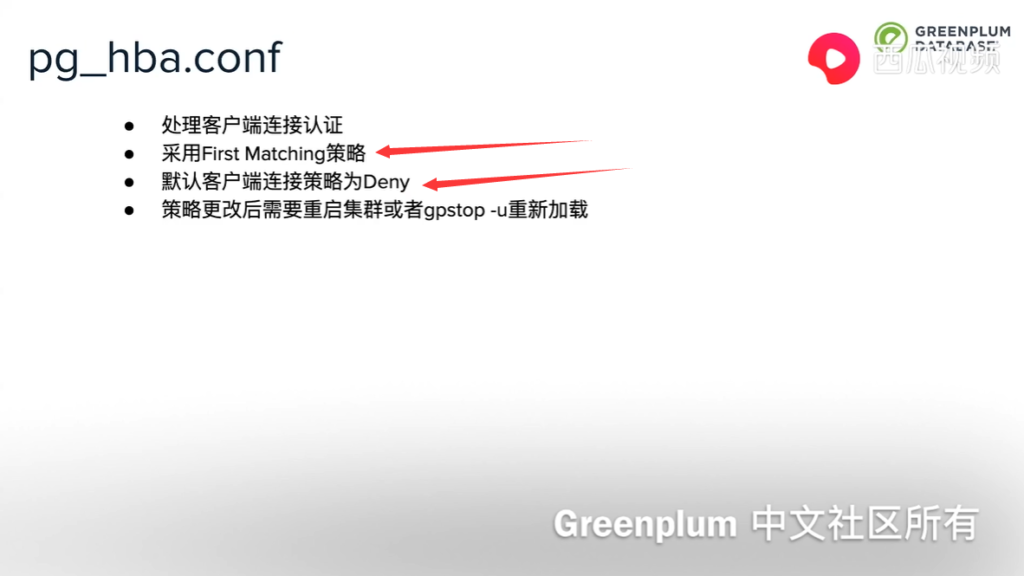

5.2.1. Configure pg_hba.conf

Configuration reference: https://blog.csdn.net/yaoqiancuo3276/article/details/80404883
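pg_hba.conf records are read from top to bottom, and the first record that matches the connection type, database, user and client address decides the authentication method; if no record matches, the connection is rejected. That is why, in the example below, 172.28.25.204 gets trust even though the 0.0.0.0/0 md5 rule also covers it. The following is a simplified sketch of that first-match rule, limited to "host" records with IPv4 CIDR addresses; it ignores IPv6, samenet/samehost, host names and @file/+group syntax, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Simplified pg_hba.conf matcher (sketch): first "host" record whose database,
# user and IPv4 CIDR match wins. IPv6, samenet, host names, @file/+group ignored.

# Convert dotted IPv4 (e.g. 172.28.25.204) to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  # 10# forces base 10 so octets such as "08" are not parsed as octal
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

first_hba_match() {
  local conf="$1" db="$2" user="$3" ip="$4"
  local type rdb ruser addr method rest net bits mask ipn
  ipn=$(ip_to_int "$ip")
  # Strip comments, then scan the remaining records top to bottom
  while read -r type rdb ruser addr method rest; do
    [ "$type" = "host" ] || continue
    [[ "$addr" == */* && "$addr" != *:* ]] || continue   # IPv4 CIDR only
    [ "$rdb" = "all" ] || [ "$rdb" = "$db" ] || continue
    [ "$ruser" = "all" ] || [ "$ruser" = "$user" ] || continue
    net=$(ip_to_int "${addr%/*}")
    bits=${addr#*/}
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    if (( (ipn & mask) == (net & mask) )); then
      echo "$addr $method"   # first match wins; later records are not consulted
      return 0
    fi
  done < <(sed 's/#.*//' "$conf")
  echo "no match"            # PostgreSQL denies connections that match no record
  return 1
}
```

Usage: first_hba_match /opt/greenplum/data/master/gpseg-1/pg_hba.conf yjbdw gpadmin 172.28.25.204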

# Example

vi /opt/greenplum/data/master/gpseg-1/pg_hba.conf

# TYPE DATABASE USER ADDRESS METHOD

# "local" is for Unix domain socket connections only

# IPv4 local connections:

# IPv6 local connections:

local all gpadmin ident

host all gpadmin 127.0.0.1/28 trust

host all gpadmin 172.28.25.204/32 trust

host all gpadmin 0.0.0.0/0 md5 # new rule: allow password login from any IP

host all gpadmin ::1/128 trust

host all gpadmin fe80::250:56ff:fe91:63fc/128 trust

local replication gpadmin ident

host replication gpadmin samenet trust

5.2.2. Modify postgresql.conf

In postgresql.conf, set the listen address as follows (GP 6.0 already sets listen_addresses = '*' by default):

vi /opt/greenplum/data/master/gpseg-1/postgresql.conf

listen_addresses = '*' # listen on all IP addresses
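When the same change has to be made on several installs, editing the file non-interactively avoids mistakes. The sketch below replaces an existing (possibly commented-out) "name = ..." line or appends one if none exists. set_pg_param is a hypothetical helper; it assumes GNU sed (for -i without a suffix, as on RHEL) and a value that contains no "|" or "&" characters.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set (or add) a parameter in a postgresql.conf file.
# Assumes GNU sed, and that name/value contain no regex or sed-special chars (| &).
set_pg_param() {
  local conf="$1" name="$2" value="$3"
  if grep -q -E "^[[:space:]]*#?[[:space:]]*${name}[[:space:]]*=" "$conf"; then
    # Replace the existing line, uncommenting it if necessary
    sed -i -E "s|^[[:space:]]*#?[[:space:]]*${name}[[:space:]]*=.*|${name} = ${value}|" "$conf"
  else
    # No such line yet: append it
    printf '%s = %s\n' "$name" "$value" >> "$conf"
  fi
}
```

Usage: set_pg_param /opt/greenplum/data/master/gpseg-1/postgresql.conf listen_addresses "'*'"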

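5.2.3. Reload the modified files

gpstop -u

gpstop -u reloads pg_hba.conf and the runtime parameters in postgresql.conf without shutting down the cluster. listen_addresses can only be set at server start, so if you changed it, restart the cluster with gpstop -r instead.

5.2.4 Client login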

Using the connection details from the installation, log in with a tool such as pgAdmin 4 or Navicat.

This completes the installation.

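About the author

Liang Hongchao (梁鸿超): passionate about IT, skilled in data analysis and data warehouse construction, and currently learning to build real-time data warehouses. Works in the securities industry as a big data developer.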